As an avid fan of the late, great Douglas Adams, I am always on the hunt for excuses to regale (read: bore) my colleagues with his greatest work, The Hitchhiker's Guide to the Galaxy (HHGG), Trilogy of Four (yes, you read that right: a four-part series of three). A few weeks ago, I was handed exactly that opportunity at, can you imagine, of all possible places, the South African Fraud Summit, where Lee Naik (CEO: TransUnion Africa) made an interesting statement, which I roughly quote: ‘Artificial Intelligence has all the answers… it just needs the right question’.

HHGG fans may recall Deep Thought, the supercomputer tasked with determining the meaning of ‘Life, the Universe and Everything’. It crunched the problem for 7.5 million years to arrive at the simple but infinitely confusing answer ‘42’, which Deep Thought then conceded was meaningless because nobody had ever really known what the question was. Thus begins the process of designing an even more powerful super-duper mega-computer, which would take 10 million years to compute ‘the Ultimate Question’.

Sound familiar? Machine learning (ML), neural networks and their creative descriptors and differentiators, including Artificial (ANN), Deep (DNN), Convolutional (CNN), etc., etc., all under the vague banner of Artificial Intelligence (AI), are spawning a multitude of intelligent machines that are able to answer, er, what was the question again?

In the case of user security, thankfully, the priority questions are fairly well defined and limited to:

- Identification – who is the person (or Bot, but that’s for later)

- Verification – how can the identity, claimed or inferred, be validated

- Fraud Management – essentially the inverse of Identification and Verification

At Opus Research, we call this Intelligent Authentication (#IAuth).

Making Sense of Sensors, Sensors and More Sensors

Among UX designers, mobile has become the priority platform. Even in brick-and-mortar locations, user experiences for employees and consumers emulate the mobile experience, with virtual and augmented reality innovations bridging the gap between the real and the virtual. Banks such as BNP, Wells Fargo and Citi have been experimenting with VR since 2017.

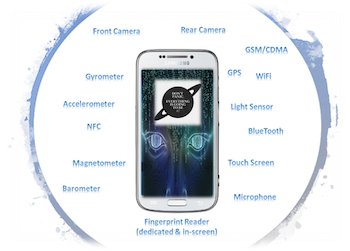

Key to these innovations are the variety and quality of sensors on mobile devices. Even the most basic smartphones bristle with more than 10 different sensors that may be used for a variety of functional and authentication applications. Other ‘smart’ devices, such as appliances, speakers and possibly the ‘holy grail of sensors’, the automobile, with hundreds of sensors, are affording innovators a dizzying array of sources from which to develop richer, more secure user experiences.

Unstructured Data is Rich Fodder for, dare I say, Artificial Intelligence

Sophisticated pattern recognition algorithms have suddenly made a wide range of unstructured data and analogue media, such as audio, pictures, videos, motion and gestures, available for processing in a tantalising number of ways. One of the companies doing this is BioCatch, whose behavioural biometrics have profiled about 70 million individuals and monitor the typing, tapping and swiping of over six billion transactions a month. Aerendir Mobile has patented a method to measure the user's physiology through micro-vibrations picked up by sensors that are already on smartphones. The blending of multiple modalities is also growing; for example, ID R&D’s ‘fusion biometrics’ blends facial and behavioural biometrics for mobile login and step-up authentication.

Next, multi-modal biometrics are reducing reliance on complicated registration processes, in which identity is asserted through a process called ‘Ground Truth’. Instead, they use the known behaviours of legitimate users to distinguish those users from live imposters and from machine-based attacks by the likes of RATs (remote access trojans), bots and malware.

What’s more, these algorithms, constructed within AI processes, don’t discriminate by the source of the data, making it possible to blend diverse datasets in a multiplicity of ways.
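To make ‘blending’ a little more concrete, here is a minimal sketch of weighted score-level fusion, one well-known way to combine biometric modalities. It assumes each modality has already produced a match score normalised to the range 0 to 1; the modality names, weights and the 0.7 acceptance threshold are hypothetical illustrations, not the method used by ID R&D or any other vendor mentioned here.

```python
from dataclasses import dataclass


@dataclass
class ModalityScore:
    name: str      # hypothetical modality label, e.g. "face" or "typing"
    score: float   # match score, assumed already normalised to [0, 1]
    weight: float  # hypothetical trust weight assigned to this modality


def fuse_scores(scores: list[ModalityScore]) -> float:
    """Weighted-sum score-level fusion; returns a combined score in [0, 1]."""
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("at least one modality needs a positive weight")
    # Each modality contributes in proportion to its weight.
    return sum(s.score * s.weight for s in scores) / total_weight


def authenticate(scores: list[ModalityScore], threshold: float = 0.7) -> bool:
    """Accept the user when the fused score meets the (hypothetical) threshold."""
    return fuse_scores(scores) >= threshold


if __name__ == "__main__":
    session = [
        ModalityScore("face", 0.92, 0.6),
        ModalityScore("typing", 0.55, 0.4),
    ]
    print(round(fuse_scores(session), 3))
    print(authenticate(session))
```

Here a strong face match offsets a middling typing-rhythm score. Weighted averaging is only one option; an engine that treats all sensor data alike could instead learn a joint model over the raw sensor features (feature-level fusion).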

Flipping the Script on Sensing and Processing: Edgy bAIometrics

Finally (well, for now at least), edge computing is making it possible for all of this complex processing to happen on-device, either through dedicated chips or low-footprint software. Modality-specific biometrics such as voice, fingerprint and facial recognition are starting to be replaced by a single engine capable of treating all sensor data alike, leveraging common processes and resources for far more efficient, multi-modal, context-aware authentication. We see the potential for all lines between biometric modalities to blur, with “AI Inside”.
