Smartphone users appear to be becoming increasingly familiar with, and accepting of, biometrics to unlock phones or log into apps. Many consumers expect, even sometimes demand, alternatives to PINs, passwords, and tokens as a means to access devices and services.

Still, despite these growing trends, many consumers hesitate to sign up for mobile biometrics when offered. And some commercial enterprises, especially banking and financial services firms, continue to express skepticism about using mobile biometrics to protect high-value transactions.

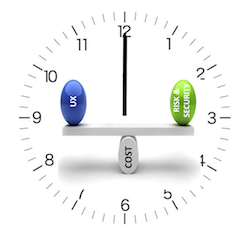

This reluctance to implement and adopt mobile biometrics has historically related to the compounded complexity of risk, usability and cost.

However, as these are no longer emerging technologies and customer digitization strategies advance at a steady pace, the clock is ticking.

Opus Research believes that all of these elements have evolved in different ways to become far more acceptable and accessible, including:

- Usability – User acceptance is continually improving due to increasing exposure to biometrics (e.g. access control, border control, and as mentioned above, unlocking phones and apps). This comfort extends beyond usage to allow biometric data to be stored by third parties.

- Risk – Professionals are now evaluating biometrics in relation to other authentication methods, instead of as independent emerging technologies, and can draw on a multitude of live deployments with referenceable proof points on accuracy and performance. As risk is about probability and not certainty, biometrics, whose error probabilities are expressed as False Accept (FA) and False Reject (FR) rates, far outperform older methods.

- Cost – Continued innovation in smartphone screens, fingerprint scanners, cameras and microphones has made biometrics accessible to the billions of smartphone users globally. Together with ‘as-a-Service’ offerings, whether on-device, hybrid or cloud-based, this has significantly reduced the overall cost of implementation.

Yet the friction for enterprises to deploy and users to adopt still persists; we believe that this is due to apprehension on a number of fronts including overcoming several key ‘known knowns’ as a means to promote awareness and understanding.

Overcoming the apprehension

The rapid pace of development, together with integrating into complex enterprise architectures, creates immense angst among industry decision-makers and enterprise risk and security professionals. As previously mentioned, doing nothing is not an option.

This makes sense, as reputational damage from fraud and data breaches is overwhelmingly the top concern of banking professionals (75%), according to a recent Mastercard-commissioned study with the University of Oxford. A subsequent white paper, “Mobile Biometrics in Financial Services: A Five Factor Framework,” confirms this, with 88% of professionals from the financial services industry expecting to be involved in making key decisions relating to implementing mobile biometrics. Yet this expectation is coupled with a sense of trepidation, with only 36% of business professionals claiming to have adequate experience.

Hence, the best way to move forward is, to borrow a well-worn phrase, to be “agile and structured,” thereby ensuring that large lessons learned exceed small failures.

Biometrics is not new

Many organizations, especially in financial services, already use biometrics across fingerprint, facial recognition and voice modalities. Opus Research’s last census of voice biometrics implementations (Nov 2016) revealed that more than 135 million people around the world had enrolled for services that would use their voice to speed up the authentication process. Opus estimates that this figure will at least double by the end of 2017. Clients and customers of banks and financial services companies accounted for over half of the enrolled population, so it is evident that financial services companies around the world are well-acquainted with biometric authentication.

Solutions that give banking customers the choice to use fingerprints, voice or facial recognition have been showcased by companies ranging from financial services giants such as USAA to so-called “challenger” banks such as Atom Bank in the UK, which regards the use of leading-edge technology as a differentiator in an increasingly competitive environment. It is routine for mobile banking and brokerage apps to enable smartphone owners to use their fingerprints instead of passwords to open the app, check balances, make payments and even deposit checks.

LESSONS LEARNED: 6 Key Considerations in Deployment Strategies

Let me caveat this section by saying that

1. Trialing the “latest and greatest” is becoming less relevant

While the technologies continue to evolve and improvements are being released regularly, most of the solutions across biometric modalities have proven use cases. Even solutions from long-standing OEMs that are a few versions behind deliver adequate performance. While OEMs generally don’t warrant their product performance, there are numerous live implementations that can be relied upon for real-world results. Add to this the ‘as-a-Service’ offerings, where enterprises are able to plug into existing cloud-based infrastructures that are already operational, and the need to test, trial and pilot diminishes even further.

2. Solution performance is not only about biometric accuracy

Biometric modality performance is commonly measured in terms of False Accept (FA) and False Reject (FR) rates, and is often summarized as an Equal Error Rate (EER) or a Receiver Operating Characteristic (ROC) curve. Oxford/Mastercard caution against overstating the importance of accuracy. Decision makers tend to gravitate towards these measures because they are relatively easy to quantify and provide a common basis for comparing vendor products. The biometric engine, despite being the heart of the system, is only one of many components that make up the overall solution. Various other elements, especially in a mobile context, affect practical performance. These include sensors, environmental noise, channel noise, application usability and user capability. While vendors endeavor to cover as wide a variety of these elements as possible, it is impractical to do this at scale, especially given the distributed nature of mobile biometrics.
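To make the accuracy measures above concrete, here is a minimal Python sketch of how FA and FR rates, and an approximate EER, could be computed from match scores. The scores, the function names and the "accept when score is at or above the threshold" rule are all illustrative assumptions, not taken from any vendor product or from the Oxford/Mastercard study.

```python
from typing import List, Tuple


def error_rates(genuine: List[float], impostor: List[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false_accept_rate, false_reject_rate) at one threshold.

    Assumption: a score at or above the threshold counts as an accept.
    """
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


def approximate_eer(genuine: List[float],
                    impostor: List[float]) -> Tuple[float, float, float]:
    """Scan every observed score as a candidate threshold and return
    (threshold, far, frr) where the two error rates are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, far, frr)
    _, t, far, frr = best
    return t, far, frr


# Hypothetical match scores, invented purely for illustration.
genuine_scores = [0.91, 0.85, 0.78, 0.66, 0.95, 0.72, 0.88, 0.59]
impostor_scores = [0.12, 0.33, 0.47, 0.61, 0.25, 0.70, 0.18, 0.40]

threshold, far, frr = approximate_eer(genuine_scores, impostor_scores)
print(f"threshold={threshold} FAR={far:.3f} FRR={frr:.3f}")
```

Raising the threshold lowers false accepts at the cost of more false rejects, which is why a single EER figure says little about how a deployed solution behaves once sensors, noise and usability come into play.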

3. Establishing Ground-truth is vital, but collaboration is on the way

Ground-truth is the initial process of confirming a user’s identity, prior to registering the user for any future biometric activity. This is an identification process that may be conducted more than once, and is organization and use-case specific. Current registration methods range from PINs/passwords to security questions to one-time passwords (OTPs) and other hard tokens to physical documents (ID books/cards, driver’s licenses, etc.). Most of these are already effective in mobile registrations. The emergence of AI and blockchain has seen the creation of a multitude of federated identity initiatives, many of them government-sponsored, such as India’s UIDAI, the UK’s GOV.UK Verify and DigID in the Netherlands, as well as public-private partnerships such as SecureKey Concierge in Canada. Social sign-on, such as ‘login with Facebook’, is also a commonly adopted method for federating identity assertion.

Views on the practical importance of ground truth have, like those on biometric accuracy, evolved over time. As biometric templates may be used to trace back to actual individuals, it remains unlikely that a fraudster would go through the trouble of passing a series of registration tests simply to enroll their own, traceable biometric template. It would be simpler to use the same registration loopholes to access the service or other value directly.

4. Proprietary Algorithms are not necessarily Vendor Lock-in

The absence of biometric standards has allowed for the use of proprietary algorithms that are not interchangeable among solution providers. This is especially important for server-based architectures, which are dominant in contact centers. However, the move in mobile appears to be towards on-device and hybrid architectures, in which case, the introduction of mobile handset biometrics linked to the OS reduces this to the device level. The downside, instead, is when devices are changed by users. However, users are quite accepting of the need to re-register for most services when changing devices.

5. Don’t Boil the Interoperability Ocean

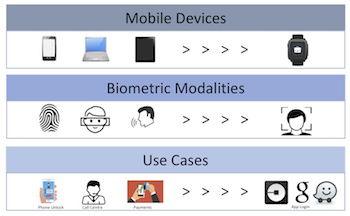

While it is vital to consider a unified cross-channel user service, and ideally to provide a similarly delightful user experience across channels, that may not be necessary in the case of mobile biometrics. Oxford/Mastercard identify three types of biometric system interoperability when considering user authentication:

- Devices: Biometrics measured by different devices (e.g., mobile phone, laptop, wearable)

- Modalities: Different biometric traits used interchangeably (e.g., fingerprint, facial recognition)

- Use cases: Different applications (e.g., mobile banking login, payment verification)

And while the survey reveals that at least 66% of industry professionals strongly believe interoperability is vital, this must be balanced with costs, complexities, risks and, most important, the profile of users and transactions that will actually interoperate across these dimensions, particularly within a single transaction. We therefore counsel that full interoperability is often not necessary, and that all uses be pragmatically evaluated.

6. Favor the most important use-case, and defer the edge-cases

Use cases generally gravitate towards specific applications, and their related channels, interfaces and devices. By way of an extreme example, consider a biometric login to a banking app on a mobile device, which may not be interoperable with a website login on a PC or with a contact centre staffed by human agents. A login that spans these channels within a single attempt is a far-edge case that would not yield much return on effort, cost and time.

Also, in a fully device-interoperable solution, the choice between distributed and centralized storage becomes quite critical, as this affects all interoperability elements. With centralized storage, biometric measurements are provided by a multitude of sensors, which may be manufactured by different OEMs and hence would have different levels of performance, making device interoperability very difficult. The coexistence of poor- and high-quality sensors forces the biometric system to adapt every sample to the weakest element, allowing adversaries to exploit these weaknesses via rollback attacks (i.e., attacking weaker/older sensors is easier than attacking newer/stronger ones).

[This excerpt is published with permission from Biometric Technology Today – To learn more about how “doing nothing is not an option” when it comes to mobile biometrics, please read the full article in the latest edition of Biometric Technology Today.]
