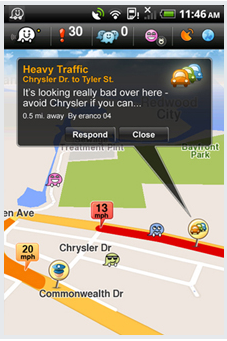

In February 2011 we posted this note about speech-enabling Waze, the crowdsourced traffic reporting app for smartphones. At the time, we noted that speech input and output would be a necessity for a truly “hands-free/eyes forward” navigational app for smartphones.

True to their word, the engineers at Waze have steadily added to the app’s voice-based features and functions. As noted in this post in the company’s “By the Waze” blog, TTS-based driving instructions have been a feature of the “Commuting Widget” available for download from the Android Marketplace. Then, on September 22, Nuance Communications let it be known that the voice rendering of navigational advice in the Waze applications for both Android and iOS-based devices was using a cloud-based instantiation of its flagship text-to-speech rendering software called Vocalizer Network as invoked from Nuance Vocalizer Studio.

In a separate but related story, Nuance also announced that it had retooled the packaging of the services and features of the Nuance Mobile Developer (NDEV-Mobile) program. As described in this press release, the new configuration offers third-party developers three distinct tiers to simplify how they use Nuance’s Dragon speech recognition on multiple mobile platforms, including Windows Phone 7, in addition to iOS, Android and an HTTP-based Web interface. Since its inception in January 2011, the program has registered over 4,000 mobile app developers and, according to the Nuance press release, it “has voice-enabled some of the market’s most popular apps, including Siri, Price Check by Amazon, Ask for iPhone, Merriam-Webster, Dictionary.com, RemoteLink from OnStar, SpeechTrans, Yellow Pages and AirYell from Avantar, iTranslate, Taskmind, SayHi Translate, Vocre, Bon’App” among others.

The ability to voice-enable search, navigation and translation has captured the imagination and energy of mobile app developers. But the notion of knitting these functions together to act as a personal agent is the ultimate enticement.

Categories: Articles
