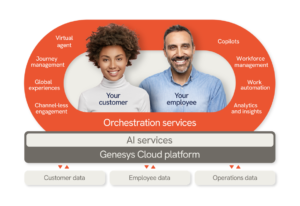

Early in May, Genesys formally introduced a line of AI-infused capabilities packaged as Genesys Cloud AI and referred to as “artificial intelligence for customer service automation.” All told, Genesys brings in GenAI and Conversational AI to power Agent Copilots, Virtual Agent, Empathy Detection and a new “Modern Agent Workspace.” Yet, to me, the most exciting aspect of the announcement is the courage it shows in transparently putting a price on the consumption of output from large language models (LLMs) and Generative AI resources.

Determining the cost of GenAI is both complex and highly dynamic. Amy Stapleton and I tackled some of the thorny issues of GenAI pricing in an ebook entitled “The LLM Conundrum: What Does GenAI Really Cost Your Company?”, issued just prior to Enterprise Connect 2024 in March. In the introduction, we noted that asking what it costs to deploy LLMs and GenAI is “kind of like asking ‘What does a house cost?’, ‘How much is a car?’, or ‘What would you pay for your Labradoodle (if it is, indeed, a real Labradoodle)?’ Ultimately, the price can vary significantly based on your specific requirements.”

A Token for Your Thoughts

To its credit, Genesys’ AI Experience comes with pricing parameters that answer the LLM Conundrum. Genesys had long provided a mechanism for customers to “bring their own technologies” under a “per invocation” model that attached a small fee each time an implementation reached out to Amazon Lex, Google Dialogflow or Nuance Mix. For current Cloud CX subscribers, a “per invocation rate” structure is spelled out here. Exactly what is invoked feels a little confusing: it is expressed as a price per turn/minute, translated into what Genesys terms “AI Experience tokens”.

Companies that have licensing agreements with OpenAI or competitors like Anthropic, Cohere, Hugging Face, or Google are already familiar with token-based pricing. They are also well aware that the price for tokens varies significantly by volume. OpenAI’s fees for API access to GPT-3 (in 2023) ranged from $0.0006 to $0.06 per 1,000 tokens. Competition has pushed token prices down, and a “free” option is available for low-volume users. Still, with anticipated spending in the trillions of dollars for new data centers and personnel for continuous training of models, the hyperscalers are going to have to recapture their costs somehow.
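To put that range in perspective, here is a rough sketch of the arithmetic in Python. It uses only the two per-1,000-token endpoints quoted above. The monthly volume of 5 million tokens is a hypothetical figure picked for illustration, not a number from any vendor, and the function name is my own.

```python
from decimal import Decimal

# Endpoints of the 2023 GPT-3 API price range quoted above (USD per 1,000 tokens).
LOW_PRICE_PER_1K = Decimal("0.0006")
HIGH_PRICE_PER_1K = Decimal("0.06")


def llm_token_cost(tokens: int, price_per_1k: Decimal) -> Decimal:
    """Return the USD cost of `tokens` tokens at a given price per 1,000 tokens."""
    return (Decimal(tokens) / Decimal(1000)) * price_per_1k


# Hypothetical monthly volume, for illustration only.
monthly_tokens = 5_000_000

low = llm_token_cost(monthly_tokens, LOW_PRICE_PER_1K)
high = llm_token_cost(monthly_tokens, HIGH_PRICE_PER_1K)

print(f"Low end:  ${low:,.2f}")
print(f"High end: ${high:,.2f}")
```

The same workload lands anywhere within a hundredfold spread depending on which price applies, which is the “What does a house cost?” problem in miniature.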

In Genesys’ case each token costs $1 (US) a month here in the United States. Every Genesys Cloud subscriber starts each month with $250 worth of free tokens, which can be used as the customer sees fit. In addition to the monthly per agent charge for the selected tier of Cloud CX, there are token calculations for use of multiple features of the Genesys Cloud, including Predictive Engagement (real-time journey analytics), Predictive Routing, bots, and Agent Assist (as illustrated in the table below).
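As a back-of-the-envelope sketch, the token portion of a monthly bill might be estimated as below. The $1 token price and the $250 monthly allotment come from the figures above. Everything else is an assumption on my part: that the free allotment is simply netted against consumption before billing, and the consumption numbers themselves, which are hypothetical. Per-agent tier charges are not included.

```python
TOKEN_PRICE_USD = 1          # each AI Experience token costs $1 (US)
FREE_TOKENS_PER_MONTH = 250  # $250 worth of free tokens at $1 apiece


def monthly_token_charge(tokens_consumed: int) -> int:
    """Estimate the USD charge for tokens consumed beyond the free allotment.

    Assumes (not confirmed by Genesys) that free tokens are subtracted
    from total consumption and only the remainder is billed.
    """
    billable_tokens = max(0, tokens_consumed - FREE_TOKENS_PER_MONTH)
    return billable_tokens * TOKEN_PRICE_USD


# Hypothetical consumption levels, for illustration only.
for consumed in (180, 250, 400):
    print(f"{consumed} tokens consumed -> ${monthly_token_charge(consumed)} charged")
```

Under these assumptions, a subscriber who stays within the free allotment pays nothing for tokens, and each token beyond it adds a dollar.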

A Stab at Standard Pricing

We have long counseled enterprise decision makers to lean on their technology vendors to package and provide access to LLMs and GenAI resources. In addition to displaying the metered pricing for the features and functions above, Genesys has organized “AI Experience bundles” that include a pre-determined number of bot sessions (authored through its Digital Bot Flows), Predictive Engagement events, and predictive routing interactions. The packages also include unlimited use of tools for managing knowledge bases (Knowledge Workbench), as well as unlimited use of its support center, and Agent Assist, which offers agents potential answers to customer questions.

Bundled pricing brings predictability to the monthly expense associated with LLMs and GenAI. The Genesys Cloud CX AI Experience bundle carries a $60 monthly charge per agent. The Digital-only version of the Genesys Cloud CX AI Experience bundle costs $40 per agent per month. With the addition of tokens, Genesys now allows customers to consume what they need from the AI capabilities on the Genesys Cloud platform as a single budget item, even if consumption changes over time.

For the Love of Tokens

I grew up loving pinball. I was not a wizard; just an addict. When I was young I didn’t really care if it was a game from Bally, Gottlieb or Williams. I’d go to an arcade and put a stack of quarters on the top glass to stake a claim for a couple hours of fun. When video games from Nintendo and Atari came along, evenings were sucked up with Pac-Man, Missile Command, Asteroids or Dig Dug. Something else happened too. Following gaming parlors with quarter slots, video game parlors switched to “Tokens”. That made it natural for tokens to wend their way into the universe of multiplayer games like Fortnite, Minecraft or Grand Theft Auto.

Over the decades, gaming tokens have come to represent in-game assets, like weapons, skins, or new characters. In many instances, players simply earn tokens to extend their lives. When treated as a currency, tokens facilitate interoperability, enabling players to take the assets they’ve earned in one game and move them over to other games in a virtual world. It is not too much of a reach to anticipate a similar evolution of the word “token” in the world of LLMs and GenAI. Soon you should see an expanded presence of consumption and pricing models for large language models (LLMs) that cross platforms and are core to refining GenAI-infused customer and agent experiences, as well as business outcomes.
