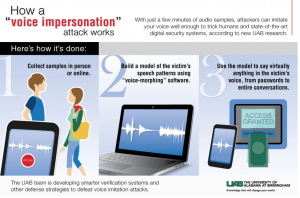

The Voice Identification and Verification (ID&V) community was rattled a bit when a group of researchers at the University of Alabama-Birmingham delivered a paper to the European Symposium on Research in Computer Security asserting that voice authentication systems can be defeated by hackers using “off-the-shelf voice morphing” software. Initial press coverage naturally played up the vulnerability angle. The illustration accompanying this post, based on the research, shows how easy it is to use a smartphone or any recording device to collect a sample of an individual’s voice.

Professor Nitesh Saxena, director of the Security and Privacy in Emerging computing and networking Systems (SPIES) lab at UAB, explained that only a few minutes of audio samples are needed as raw material for creating a “voice clone” that can be used to fool other people as well as unspecified automated voice authentication systems. In the team’s case study, an unspecified “voice-biometrics, or speaker-verification” system showed an 80-90% false acceptance rate, a figure that is far out of line with the accuracy that Opus Research has observed in deployments of systems from the leading voice biometrics vendors. Human listeners did not fare especially well either: in a sample of 100 users, live individuals rejected “morphed voice samples of celebrities as well as somewhat familiar users about half the time.”

So what gives? First of all, I suspect that the experiment involved an untuned voice-based ID&V system. As we noted in a report entitled “Best Practices for Voice Biometrics in the Enterprise”, companies must allocate ample time to create and tune an accurate background model for detecting imposters. Improper and untuned models can lead to unacceptably high false acceptance rates. Another important “best practice” is to use multiple factors for authentication. Indeed, it is routine for companies that employ voice biometrics as part of their customer authentication fabric to take into account the originating phone number, the location of the individual making the call and other biometrics, such as facial or fingerprint recognition.
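To make the tuning point concrete, here is a minimal sketch in Python of how a false acceptance rate depends on where the decision threshold is set. Everything in it is an assumption for illustration: the scores are made up, the function names are hypothetical, and it does not represent the UAB experiment or any vendor's scoring method.

```python
def false_acceptance_rate(impostor_scores, threshold):
    """Fraction of impostor attempts scoring at or above the threshold."""
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    accepted = sum(1 for score in impostor_scores if score >= threshold)
    return accepted / len(impostor_scores)


def false_rejection_rate(genuine_scores, threshold):
    """Fraction of genuine attempts scoring below the threshold."""
    if not genuine_scores:
        raise ValueError("need at least one genuine score")
    rejected = sum(1 for score in genuine_scores if score < threshold)
    return rejected / len(genuine_scores)


# Hypothetical match scores in the range 0..1 (illustrative only, not real data).
impostor_scores = [0.35, 0.42, 0.55, 0.61, 0.48, 0.72, 0.30, 0.58, 0.66, 0.51]
genuine_scores = [0.81, 0.77, 0.92, 0.69, 0.88, 0.95, 0.74, 0.85, 0.90, 0.79]

# Sweep a few candidate thresholds to see the trade-off between the two rates.
for threshold in (0.30, 0.50, 0.70):
    far = false_acceptance_rate(impostor_scores, threshold)
    frr = false_rejection_rate(genuine_scores, threshold)
    print(f"threshold={threshold:.2f}  FAR={far:.0%}  FRR={frr:.0%}")
```

With these invented numbers, a threshold left too low accepts every impostor, while a higher one cuts false acceptances sharply at the cost of rejecting an occasional genuine caller. That trade-off is what tuning is meant to settle for a given deployment.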

Liveness testing is another big factor in voice-based ID&V. As Mathew J. Schwartz notes in an article on BankInfoSecurity.com, Professor Saxena “suspects that the voice attacks his lab has concocted can be blocked, in part, by checking for the presence of a live speaker.” The article goes on to quote the researcher directly: “Ultimately, the best defense of all would be the development of speaker verification systems that can completely resist voice imitation attacks by testing the live presence of a speaker.” Voice biometrics solutions from the leading vendors have proprietary methods for both liveness detection and recognition of synthesized voices, in addition to supporting multi-factor approaches to authentication.
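As a rough illustration of how liveness and multiple factors can combine, the Python sketch below treats the voice match as only one input to the decision. The field names, the 0.7 threshold and the three outcomes are hypothetical choices made for this example; vendors' actual liveness and synthesis-detection methods are proprietary and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class AuthSignals:
    """Signals gathered for one call (all fields are hypothetical)."""
    voice_score: float         # voice match score in 0..1
    liveness_passed: bool      # result of a liveness / synthetic-voice check
    known_phone_number: bool   # call originates from a number on file
    expected_location: bool    # caller location is consistent with history


def decide(signals: AuthSignals, voice_threshold: float = 0.7) -> str:
    """Return 'accept', 'step_up' or 'reject' for one authentication attempt."""
    # A failed liveness check rejects the attempt no matter how good the match.
    if not signals.liveness_passed:
        return "reject"
    if signals.voice_score < voice_threshold:
        return "reject"
    # A good voice match alone is not enough; corroborating factors are required.
    if signals.known_phone_number and signals.expected_location:
        return "accept"
    # Otherwise ask for another factor (for example, a one-time passcode).
    return "step_up"


# A cloned voice may score well yet still fail the liveness check.
print(decide(AuthSignals(0.95, False, True, True)))   # reject
print(decide(AuthSignals(0.95, True, True, True)))    # accept
print(decide(AuthSignals(0.95, True, False, True)))   # step_up
```

The design point is that a high match score cannot by itself open the door, so a morphed sample that fools the matcher still has to get past the liveness check and the contextual factors.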

Going forward, Professor Saxena said, “Our future research will examine this [liveness testing and use of multiple factors] and other defense strategies.” Opus Research counsels enterprise security officers to do the same.
